Stable Diffusion Video

In the fast-evolving field of artificial intelligence, Stable Diffusion AI has emerged as a groundbreaking technology, particularly for video generation. This article explores what Stable Diffusion AI can do with video. It surveys five video-to-video techniques you can run on your own machine and then introduces Stable Video Diffusion, Stability AI's first foundation model for generative video.

Transforming Videos with Stable Diffusion AI: Video to Video Stable Diffusion

“Transforming videos into animation is never easier with Stable Diffusion AI. You will find step-by-step guides for 5 video-to-video techniques in this article. The best of all: You can run them FREE on your local machine!”

The Techniques

1. ControlNet-M2M Script

The ControlNet-M2M script, available through the ControlNet extension, transforms a video one frame at a time. Each frame is passed to ControlNet, which guides the generation of the corresponding output frame.
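The frame-by-frame idea can be sketched in a few lines of Python. This is a minimal sketch, not the script's actual code: `transform_frames`, `extract_control`, and `generate` are hypothetical names, standing in for the overall loop, a ControlNet preprocessor (for example an edge or pose detector), and one Stable Diffusion generation. Reusing the same prompt and seed on every frame is a common way to reduce flicker, but note that nothing is passed from one frame to the next.

```python
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")
Control = TypeVar("Control")
Output = TypeVar("Output")


def transform_frames(
    frames: Sequence[Frame],
    prompt: str,
    extract_control: Callable[[Frame], Control],
    generate: Callable[[str, Control, int], Output],
    seed: int = 1234,
) -> List[Output]:
    """Transform each frame independently, guided by its own control map.

    `extract_control` and `generate` are hypothetical placeholders for a
    ControlNet preprocessor and a single Stable Diffusion generation.
    """
    outputs: List[Output] = []
    for frame in frames:
        control = extract_control(frame)  # control map built from this frame only
        # Same prompt and seed for every frame; no state carries over between frames.
        outputs.append(generate(prompt, control, seed))
    return outputs


if __name__ == "__main__":
    # Toy stand-ins: "frames" are numbers and the "control map" doubles them.
    result = transform_frames(
        [1, 2, 3],
        "anime style",
        extract_control=lambda f: f * 2,
        generate=lambda p, c, s: f"{p}|{c}|{s}",
    )
    print(result)  # ['anime style|2|1234', 'anime style|4|1234', 'anime style|6|1234']
```

Because each frame is handled in isolation, small differences between generations show up as flicker, which is the problem the later techniques try to address.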

2. ControlNet img2img

Like the ControlNet-M2M script, ControlNet img2img uses ControlNet to guide the transformation of each video frame. The difference is that the frames go through img2img, so every output frame starts from the original frame rather than from pure noise.
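Frame-based workflows like this one need the video split into numbered images before the img2img run and reassembled afterwards. Below is a minimal sketch, assuming ffmpeg is installed and on your PATH and that the stylized frames are saved under the same numbered file names. The helper names (`split_command`, `join_command`, `run`) and the folder layout are hypothetical, and the img2img batch run itself happens in the web UI between the two steps.

```python
import subprocess
from typing import List


def split_command(video: str, frames_dir: str, fps: int) -> List[str]:
    """ffmpeg command that writes frames_dir/00001.png, 00002.png, ... at `fps`."""
    return ["ffmpeg", "-i", video, "-vf", f"fps={fps}", f"{frames_dir}/%05d.png"]


def join_command(frames_dir: str, out_video: str, fps: int) -> List[str]:
    """ffmpeg command that encodes the numbered PNGs back into an H.264 video."""
    return [
        "ffmpeg",
        "-framerate", str(fps),
        "-i", f"{frames_dir}/%05d.png",
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",  # widely supported pixel format for players
        out_video,
    ]


def run(cmd: List[str]) -> None:
    """Run a command and raise CalledProcessError if it fails."""
    subprocess.run(cmd, check=True)
```

A typical session would create a `frames` folder, call `run(split_command("input.mp4", "frames", 12))`, point the img2img batch input at `frames` and its output at a folder such as `stylized`, and finish with `run(join_command("stylized", "output.mp4", 12))`. Using the same frame rate in both commands keeps the output the same length as the source; a lower rate means fewer img2img runs.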

3. Mov2mov Extension

The Mov2mov extension adds a dedicated tab to the AUTOMATIC1111 Stable Diffusion web UI. You upload a video, and the extension runs img2img on every frame and writes the results back out as a new video, so you do not have to split and reassemble the frames yourself.

4. SD-CN Animation Extension

The SD-CN Animation extension combines Stable Diffusion and ControlNet with optical flow estimation. By tracking how pixels move between consecutive frames, it carries the previous stylized frame forward into the next one, which makes the animation more consistent and gives you more control over the result.
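SD-CN Animation uses the RAFT optical flow estimator to keep its animations stable. The core idea, reusing the previous stylized frame by moving its pixels along the estimated motion, can be illustrated with a toy backward warp on small integer grids. This is a simplified sketch, not the extension's code. It assumes an integer flow field is already given (real flow is sub-pixel and needs interpolation), and it simply marks pixels whose source falls outside the frame as needing regeneration, whereas the extension builds a proper occlusion mask. The function name `warp_backward` is hypothetical.

```python
from typing import List, Optional, Tuple

Grid = List[List[int]]
Flow = List[List[Tuple[int, int]]]


def warp_backward(prev: Grid, flow: Flow) -> Tuple[List[List[Optional[int]]], List[List[bool]]]:
    """Warp the previous stylized frame into the current frame's layout.

    flow[y][x] = (dx, dy) is a backward flow: pixel (x, y) of the current frame
    came from pixel (x + dx, y + dy) of the previous frame. Returns the warped
    frame and a mask that is True where no source pixel exists.
    """
    h, w = len(prev), len(prev[0])
    warped: List[List[Optional[int]]] = [[None] * w for _ in range(h)]
    missing = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = flow[y][x]
            sx, sy = x + dx, y + dy
            if 0 <= sx < w and 0 <= sy < h:
                warped[y][x] = prev[sy][sx]  # reuse the already stylized pixel
            else:
                missing[y][x] = True  # nothing to copy: let the model fill it in
    return warped, missing


# Whole scene moved one pixel to the right between frames.
prev = [[1, 2, 3], [4, 5, 6]]
flow = [[(-1, 0)] * 3 for _ in range(2)]
warped, missing = warp_backward(prev, flow)
# warped  == [[None, 1, 2], [None, 4, 5]]
# missing == [[True, False, False], [True, False, False]]
```

In a full pipeline, the warped frame and the mask would then condition the next generation, so only the newly revealed regions are invented from scratch.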

5. Temporal Kit

Temporal Kit focuses on temporal consistency, that is, reducing flicker between frames. It picks keyframes from the video, tiles several of them into one grid image so they are stylized together in a single img2img pass, and then propagates the stylized keyframes to the frames in between, typically with EbSynth.
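The keyframe-and-grid bookkeeping behind this approach can be sketched as follows. The function names and parameters are hypothetical and only mirror the idea (one keyframe every N frames, tiled into square grids of `sides` x `sides` cells); the extension's own settings and file handling may differ, and the stylization and EbSynth steps are not shown.

```python
from typing import Dict, List, Tuple


def pick_keyframes(num_frames: int, frames_per_keyframe: int) -> List[int]:
    """Indices of the frames to stylize: one keyframe every `frames_per_keyframe` frames."""
    if frames_per_keyframe < 1:
        raise ValueError("frames_per_keyframe must be at least 1")
    return list(range(0, num_frames, frames_per_keyframe))


def layout_grids(keyframes: List[int], sides: int) -> List[Dict[int, Tuple[int, int]]]:
    """Group keyframes into sides x sides grids.

    Each grid maps a keyframe index to its (row, col) cell, so the stylized grid
    image can later be cut back into individual keyframes.
    """
    per_grid = sides * sides
    grids = []
    for start in range(0, len(keyframes), per_grid):
        chunk = keyframes[start:start + per_grid]
        grids.append({k: divmod(i, sides) for i, k in enumerate(chunk)})
    return grids


keyframes = pick_keyframes(100, 10)  # [0, 10, 20, ..., 90]
grids = layout_grids(keyframes, 2)   # three 2x2 grids; the last one holds only 2 keyframes
```

Stylizing four keyframes inside one image lets them share a single generation, which tends to keep their style aligned; a partially filled last grid would need padding before it is sent to img2img.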

“At the end of the article, I will survey other video-to-video methods for Stable Diffusion.”

Stable Video Diffusion: A Milestone in Generative AI

“Today, we are releasing Stable Video Diffusion, our first foundation model for generative video based on the image model Stable Diffusion.”

Representing a significant leap forward, Stable Video Diffusion is now available as a research preview. The code is available in Stability AI's GitHub repository, and the model weights are on its Hugging Face page. The accompanying research paper describes the model's technical capabilities in detail.
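If you want to try the released weights from Python, one option is the Hugging Face diffusers library, which provides a `StableVideoDiffusionPipeline`. This is an alternative to the code in Stability AI's own repository, not that code. The sketch below assumes diffusers 0.24 or later with accelerate installed, a CUDA GPU with enough memory, and the `stabilityai/stable-video-diffusion-img2vid-xt` checkpoint, which turns a single image into a short clip. The function names `center_crop_box` and `image_to_video` are our own; the crop helper prepares the input for the model's 1024x576 resolution.

```python
from typing import Tuple


def center_crop_box(width: int, height: int, target_ratio: float = 1024 / 576) -> Tuple[int, int, int, int]:
    """(left, top, right, bottom) of the largest centered crop with the target aspect ratio."""
    if width / height > target_ratio:
        # Too wide: keep the full height and trim the sides.
        new_w = round(height * target_ratio)
        left = (width - new_w) // 2
        return (left, 0, left + new_w, height)
    # Too tall (or already right): keep the full width and trim top and bottom.
    new_h = round(width / target_ratio)
    top = (height - new_h) // 2
    return (0, top, width, top + new_h)


def image_to_video(image_path: str, out_path: str = "generated.mp4", seed: int = 42) -> str:
    """Generate a short clip from one image with Stable Video Diffusion via diffusers."""
    # Heavy imports stay inside the function so the helper above works without a GPU.
    import torch
    from diffusers import StableVideoDiffusionPipeline
    from diffusers.utils import export_to_video, load_image

    pipe = StableVideoDiffusionPipeline.from_pretrained(
        "stabilityai/stable-video-diffusion-img2vid-xt",
        torch_dtype=torch.float16,
        variant="fp16",
    )
    pipe.enable_model_cpu_offload()  # needs accelerate; trades speed for lower GPU memory

    image = load_image(image_path)
    image = image.crop(center_crop_box(*image.size)).resize((1024, 576))
    generator = torch.manual_seed(seed)  # fixed seed for repeatable results
    frames = pipe(image, decode_chunk_size=8, generator=generator).frames[0]
    export_to_video(frames, out_path, fps=7)
    return out_path
```

Calling `image_to_video("input.png")` writes `generated.mp4`. Lowering `decode_chunk_size` reduces memory use during decoding at the cost of speed.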

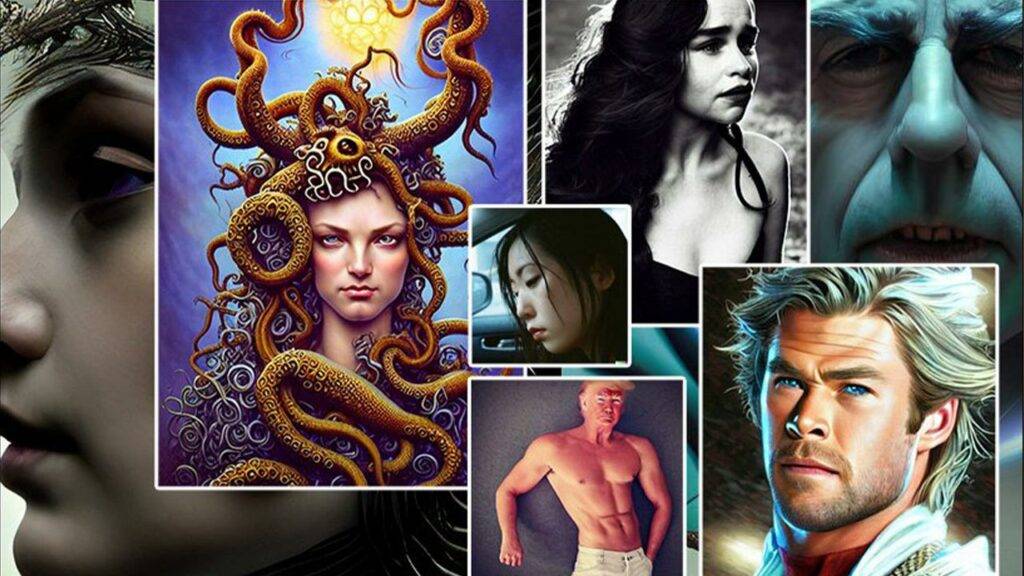

A Glimpse into Multi-View Generations

To showcase what the finetuned video model can do, Stability AI also shared sample multi-view generations. These visuals give a glimpse of the capabilities and potential applications of Stable Video Diffusion.

Unlocking Practical Applications with Stable Video Diffusion

“In addition, today, you can sign up for our waitlist here to access a new upcoming web experience featuring a Text-To-Video interface.”

Stable Video Diffusion is not just a technological marvel; it is meant for real-world use. Signing up for the waitlist gets you access to the upcoming Text-To-Video web interface once it launches, which opens the door to practical applications in sectors such as advertising, education, and entertainment.

Stable Diffusion AI has ushered in a new era for video generation, and Stable Video Diffusion stands at the forefront of this revolution. The techniques outlined in this article provide a roadmap for enthusiasts and professionals alike to explore the potential of Stable Diffusion AI. As we move forward, the intersection of artificial intelligence and video generation promises exciting possibilities, and Stable Video Diffusion paves the way for a future where generative AI is accessible to everyone.